Run a task in a VM

This example shows how to run a one-shot task in a VM via API. The CLI can also act as a client to the API during testing.

Use-cases could include:

- Running an AI coding agent in a contained environment without risking your whole workstation

- Starting on-demand IDEs for pull request development or review

- Autoscaling Kubernetes nodes - added and removed on demand

- Running a CI build or compiling untrusted customer code

- Starting a temporary service such as a database for end to end testing

- Cron jobs, batch jobs, and serverless functions

One-shot tasks are VMs that are launched on demand for a specific purpose. There's no limit on the lifetime of these VMs: they can run for any period of time, be that 250ms to process a webhook, 48 hours to run some fine-tuning, or several weeks. Just bear in mind that if you shut down or close Slicer, they will also be shut down and destroyed.

Watch a demo of the tutorial to see how fast it is to launch microVMs for one-shot tasks.

Tutorial

Create an empty hostgroup configuration.

For the fastest possible boot times, use ZFS for storage.

If you don't have ZFS set up yet, replace the storage flag with something like:

--storage image

Create tasks.yaml slicer config:

slicer new task \
  --cpu 1 \
  --ram 2 \
  --count 0 \
  --storage zfs \
  --persistent false \
  > tasks.yaml

Now start up slicer:

sudo -E slicer up ./tasks.yaml

Now set up an HTTP endpoint using a free service like ReqBin.com or webhook.site.

Write a userdata script to send a POST request to your HTTP endpoint on boot-up, then have it exit.

Save task.sh:

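cat > task.sh <<'EOF'
#!/bin/bash
# printf turns \n into a real newline; inside plain double quotes it would be sent literally
curl -i -X POST \
  -d "$(printf '%s booted\nUptime: %s' "$(cat /etc/hostname)" "$(uptime)")" \
  https://webhook.site/f38eddbf-6285-4ff8-ae3e-f2e782c73d8f

sleep 1
sudo reboot
exit 0
EOF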
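A syntax error in the userdata script would only show up after a VM has booted, so it can be worth checking the file locally first. The sketch below relies on `bash -n`, which parses a script without executing it. `check_script` is a hypothetical helper name and not part of Slicer.

```shell
#!/bin/bash
# check_script is a hypothetical helper (not part of Slicer).
# It rejects missing or empty files, then asks bash to parse the
# script without running any of it.
check_script() {
  local script="$1"
  if [ ! -s "$script" ]; then
    echo "missing or empty script: $script" >&2
    return 1
  fi
  bash -n "$script"
}

# Example: only carry on when the script parses cleanly.
# check_script ./task.sh && echo "task.sh looks OK"
```

This only catches parse errors such as an unterminated `if` or quote. It cannot tell whether the commands in the script will succeed inside the VM.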

Then run your task by booting a VM with the script as its userdata:

curl -isLSf http://127.0.0.1:8081/hostgroup/task/nodes \
  -H "Content-Type: application/json" \
  --data-binary "{
    \"userdata\": $(cat ./task.sh | jq -Rs .)
  }"

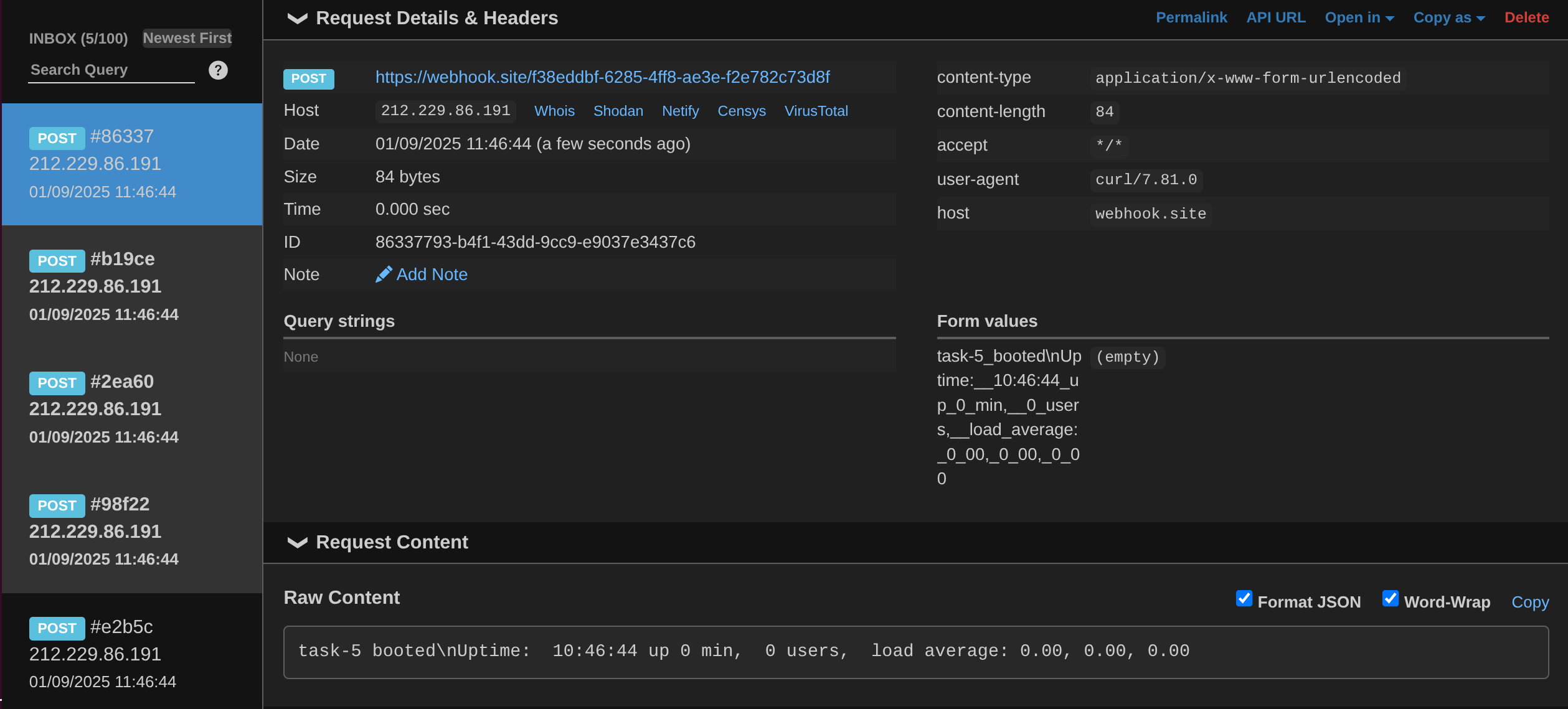

Check your HTTP bin for the results.
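The request body in the curl example is a JSON object whose `userdata` field carries the whole script as a single JSON string, which is why the script is piped through `jq -Rs .`. To make that encoding visible, here is a rough pure-bash approximation. `json_escape` is a hypothetical helper that only handles backslashes, double quotes, newlines, tabs and carriage returns, so `jq` remains the safer choice for real scripts.

```shell
#!/bin/bash
# json_escape is a hypothetical helper for illustration only.
# It covers the characters most likely to appear in a shell script;
# other control characters are NOT escaped, so prefer jq for real use.
json_escape() {
  local s="$1"
  s=${s//\\/\\\\}       # backslash first, so later escapes are not doubled
  s=${s//\"/\\\"}       # double quote
  s=${s//$'\n'/\\n}     # newline
  s=${s//$'\t'/\\t}     # tab
  s=${s//$'\r'/\\r}     # carriage return
  printf '"%s"' "$s"
}

# Build the same shape of request body that the curl example sends.
script=$'#!/bin/bash\necho "hello"'
payload="{\"userdata\": $(json_escape "$script")}"
printf '%s\n' "$payload"
```

For the two-line script above this prints `{"userdata": "#!/bin/bash\necho \"hello\""}`. One difference to keep in mind: `jq -Rs .` preserves the trailing newline of the file, whereas reading a file with `$(cat ...)` strips it.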

You can also run this in a for loop:

for i in {1..5}
do
  curl -sLSf http://127.0.0.1:8081/hostgroup/task/nodes \
    -H "Content-Type: application/json" \
    --data-binary "{\"userdata\": $(cat ./task.sh | jq -Rs .)}"
done

Each of the 5 tasks booted, posted to the endpoint, and exited.
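The loop above issues its requests one after another. Since each request is independent, they could also be sent concurrently. The sketch below is one way to do that with background jobs. `run_n` and `launch_task` are hypothetical helper names, and the endpoint is the same one used in the curl example.

```shell
#!/bin/bash
# Hypothetical helpers, not part of Slicer.

# launch_task posts task.sh as userdata, like the curl example.
launch_task() {
  curl -sLSf http://127.0.0.1:8081/hostgroup/task/nodes \
    -H "Content-Type: application/json" \
    --data-binary "{\"userdata\": $(jq -Rs . < ./task.sh)}"
}

# run_n COUNT CMD [ARGS...]: start COUNT copies of CMD in the background,
# wait for all of them, and succeed only if every copy succeeded.
run_n() {
  local n="$1"; shift
  local i pid failed=0 pids=()
  for ((i = 1; i <= n; i++)); do
    "$@" &
    pids+=("$!")
  done
  for pid in "${pids[@]}"; do
    wait "$pid" || failed=$((failed + 1))
  done
  if [ "$failed" -gt 0 ]; then
    echo "$failed of $n launches failed" >&2
    return 1
  fi
}

# Example: launch five tasks at once.
# run_n 5 launch_task
```

Because `curl` is called with `-f`, an HTTP error makes that launch exit non-zero, which `run_n` then counts as a failure.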

Launch a task from the CLI

The slicer CLI can act as an HTTP client to the REST API, which makes it a bit easier for initial exploration:

for i in {1..5}
do
  slicer vm add \
    task \
    --api http://127.0.0.1:8081 \
    --userdata-file ./task.sh
done

The output will be as follows:

VM created
Hostname: task-1
Group: task
IP: 192.168.138.2/24
Specs: 1 vCPU, 2GB RAM, GPUs: 0
Persistent: false
Created: 2025-09-09T09:45:27+01:00
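If a script needs the hostname or IP address of the new VM, the `Key: value` lines of this output can be picked apart with standard tools. The following is a minimal sketch. `vm_field` is a hypothetical helper, and it assumes the output layout shown above stays the same, which is not guaranteed across Slicer versions.

```shell
#!/bin/bash
# vm_field is a hypothetical helper. It assumes the "Key: value" layout
# shown above, which may change between Slicer versions.
vm_field() {
  local key="$1"
  # Drop optional leading whitespace plus "Key:" and print the rest.
  sed -n "s/^[[:space:]]*${key}:[[:space:]]*//p" | head -n 1
}

# Sample copied from the output above; in practice it would be captured with:
# output="$(slicer vm add task --api http://127.0.0.1:8081 --userdata-file ./task.sh)"
output='VM created
Hostname: task-1
Group: task
IP: 192.168.138.2/24
Specs: 1 vCPU, 2GB RAM, GPUs: 0
Persistent: false
Created: 2025-09-09T09:45:27+01:00'

vm_name="$(printf '%s\n' "$output" | vm_field Hostname)"
cidr="$(printf '%s\n' "$output" | vm_field IP)"
ip="${cidr%/*}"   # strip the /24 prefix length

echo "$vm_name $ip"
```

With the sample output this prints `task-1 192.168.138.2`.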

When using Cloud Hypervisor for GPU support, the --gpus flag can be passed to allocate a number of GPUs from the host into the guest VM.

This would be useful for batch inference, or AI jobs that benefit from direct access to a local LLM.

Optimise the image for start-up speed

After various Kernel modules are loaded, and the system has performed its self-checking, your code should be running at about the 2.5s mark, or a bit earlier depending on your machine.

To optimise the boot time further for one-shot use-cases, you can disable the SSH host key regeneration step that runs on start-up. It can add a few seconds to the boot time, especially if entropy is low on your system.

You can derive your own image to use, with this disabled:

FROM ghcr.io/openfaasltd/slicer-systemd:5.10.240-x86_64-latest

RUN systemctl disable regen-ssh-host-keys && \
    systemctl disable ssh && \
    systemctl disable sshd && \
    systemctl disable slicer-vmmeter

After SSH is disabled, the only way to debug a machine is via the Slicer agent using slicer vm shell to get a shell.

You can also disable slicer-agent (which is not a full SSH daemon), but the slicer vm commands will then no longer work.

If you publish an image to the Docker Hub, make sure you include its prefix i.e. docker.io/owner/repo:tag.

Cache the Kernel to a local file

Rather than downloading and extracting the Kernel on each run of Slicer, you can extract a given vmlinux file and tell the YAML file to use that.

This is preferred for a long-running host, where we need to keep the root-filesystem image and Kernel in sync.

The Kernel must agree with the root filesystem image, which means using a proper tag and not a latest tag.

Why? The Kernel is built as a vmlinux, however its modules are packaged into the root filesystem image.

Run the following:

$ arkade get crane
$ crane ls ghcr.io/openfaasltd/actuated-kernel
5.10.240-x86_64-3d7a67d1683b524b4128ad338f90b1da710f2fd9
5.10.240-kvm-x86_64-3d7a67d1683b524b4128ad338f90b1da710f2fd9
5.10.240-x86_64-ea04b63b9117c966a57e17e1bc1bfcf713cd6276
5.10.240-x86_64-bb71bdd1cd06bad2cc11f8ab3f323c3f19d41c8b
5.10.240-x86_64-2f2ebc0bbefe128aa3061e6ea6806cbcdc975208
6.1.90-aarch64-2f2ebc0bbefe128aa3061e6ea6806cbcdc975208
6.1.90-aarch64-5c59e9b9b08eea49499be8449099291c93469b80
5.10.240-x86_64-5c59e9b9b08eea49499be8449099291c93469b80

Pick a stable tag for your architecture i.e. x86_64-SHA or aarch64-SHA, then run:

$ arkade oci install ghcr.io/openfaasltd/actuated-kernel:5.10.240-x86_64-2f2ebc0bbefe128aa3061e6ea6806cbcdc975208 --output ./
$ ls
vmlinux
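The tag selection step can be scripted so that only SHA-pinned tags for one architecture are shown. Here is a small sketch of that filter. `pinned_tags` is a hypothetical helper, and the `VERSION-ARCH-SHA` pattern it matches is inferred from the tag list above rather than from any published naming scheme.

```shell
#!/bin/bash
# pinned_tags is a hypothetical helper. It reads tags on stdin and keeps
# only those of the form VERSION-ARCH-SHA (40 hex characters), which drops
# floating tags such as "-latest" and variants such as "-kvm-".
pinned_tags() {
  local arch="$1"
  grep -E "^[0-9]+\.[0-9]+\.[0-9]+-${arch}-[0-9a-f]{40}\$"
}

# Example, assuming crane is installed and uname -m reports x86_64 or aarch64:
# crane ls ghcr.io/openfaasltd/actuated-kernel | pinned_tags "$(uname -m)"
```

The filter does not say which of the remaining tags is the newest, so the final choice is still a manual one.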

Next, find the matching root filesystem image:

$ crane ls ghcr.io/openfaasltd/slicer-systemd

Use it in your YAML file, replacing kernel_image with kernel_file:

kernel_file: "./vmlinux"
image: "ghcr.io/openfaasltd/slicer-systemd:5.10.240-x86_64-2f2ebc0bbefe128aa3061e6ea6806cbcdc975208"
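Since a mismatched Kernel and root filesystem is easy to introduce when updating one of the two, a quick check before starting Slicer may help. The sketch below assumes that "matching" means both references carry an identical, non-floating tag, as in the example above. `same_pinned_tag` is a hypothetical helper.

```shell
#!/bin/bash
# same_pinned_tag is a hypothetical helper. It assumes that a "matching"
# Kernel and root filesystem share an identical tag, as in the example above.
same_pinned_tag() {
  # Keep only the part after the last colon, i.e. the tag.
  local kernel_tag="${1##*:}" image_tag="${2##*:}"
  case "$kernel_tag$image_tag" in
    *latest*)
      echo "floating tag in use: $kernel_tag / $image_tag" >&2
      return 1
      ;;
  esac
  [ "$kernel_tag" = "$image_tag" ]
}

kernel="ghcr.io/openfaasltd/actuated-kernel:5.10.240-x86_64-2f2ebc0bbefe128aa3061e6ea6806cbcdc975208"
image="ghcr.io/openfaasltd/slicer-systemd:5.10.240-x86_64-2f2ebc0bbefe128aa3061e6ea6806cbcdc975208"

if same_pinned_tag "$kernel" "$image"; then
  echo "Kernel and image tags match"
else
  echo "Kernel and image tags differ" >&2
fi
```

The kernel reference here is the one the vmlinux file was extracted from, so it needs to be recorded somewhere alongside the file, since `kernel_file` itself no longer carries the tag.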